Citation

Lou, D. (2019), “Two fast prototypes of web-based augmented reality enhancement for books”, Library Hi Tech News, Vol. 36 No. 10, pp. 19-24. https://doi.org/10.1108/LHTN-08-2019-0057

Abstract

Purpose

The purpose of this paper is to identify a lightweight, scalable augmented reality (AR) solution for enhancing library collections.

Design/methodology/approach

The author first researched the major obstacle to creating a scalable AR solution. She then explored possible workarounds and developed two prototypes that make current web-based AR work with the ISBN barcode.

Findings

Libraries have adopted Augmented Reality (AR) technology in recent years, mainly by developing mobile applications for specific education or navigation programs. [1] Yet there is still no straightforward AR solution for enhancing a library collection. One of the obstacles lies in finding a scalable and painless way to associate specific AR objects with physical books. At the title level, books already have a unique identifier: the ISBN. Unfortunately, for technical reasons, marker-based AR technology accepts only two-dimensional (2-D) objects as markers, not the one-dimensional (1-D) EAN barcode (or ISBN barcode) used on books. In this article, the author shares her development of two prototypes that make web-based AR work with the ISBN barcode. With the prototypes, a user can simply scan the ISBN barcode on a book to retrieve related AR content.

Research limitations/implications

The research mainly examined and experimented with web-based AR technologies in an attempt to identify a solution that is as platform-neutral and as user-friendly as possible.

Practical implications

The lightweight, platform-neutral AR prototypes discussed in this paper keep costs to a minimum on both the development side and the user-experience side. A library does not need to put any additional marker on any book to implement the AR, and a user does not need to install any additional application on his/her smartphone to experience it. The prototypes show a promising future in which physical collections inside libraries can become more interactive and attractive by blurring the line between reality and virtuality.

Social implications

The paper can help initiate the discussion on applying web-based AR technologies to library collections.

Originality/value

This paper is the first to share the experience of developing a web-based AR solution that works with the ISBN barcode.

Keywords

Augmented Reality, API, Web-based AR, Web-based Barcode Scanner, Web-based Barcode Reader, Markerless AR, Marker-based AR, ISBN Barcode, Collections, Books

Introduction

As author and “envisioneer” M. Pell aptly puts it, augmented reality (AR) is an incredible medium for “surfacing the invisible”. AR is an interactive experience that blends computer-generated objects seamlessly into the view of the real world. In recent years, AR applications have visualized previously invisible data in many fields, from health care [2], education and real estate [3] to indoor/outdoor navigation [4].

This makes one wonder what type of invisible data a library collection possesses that may appeal to our customers, as well as what could serve as the most cost-effective way to make that data visible through AR.

Palo Alto City Library started to design and implement web-based AR in 2018. At a regional conference hosted by the Library, attendees were invited to open AR web pages on their smartphones and scan 2-D markers around the room to experience different AR scenes. The author explained how that AR solution was developed in a recent Code4Lib article [5], where she also suggested adopting it to enhance a library collection. But scaling up is quite a different story: moving from an activity with only one AR marker and a few AR objects to a collection of thousands of books, each associated with AR content. The major obstacle lies in finding a scalable and painless way to link AR content to books. At the title level, books already have a unique identifier: the ISBN. Unfortunately, for technical reasons, marker-based AR technology accepts only two-dimensional (2-D) objects as markers, not the one-dimensional (1-D) EAN barcode (or ISBN barcode) used on books.

Explanation on AR and AR Markers

Generally speaking, AR technology can be divided into two major categories: marker-based AR and markerless AR [6]. Marker-based AR needs a specific marker to trigger an AR scene, and the association between the marker and the AR scene is predefined. This is the technology used in the Palo Alto City Library AR activity mentioned in the introduction. In contrast, markerless AR does not need special markers to identify where a virtual object should appear. Instead, it uses a technique called Simultaneous Localization and Mapping (SLAM) [7] to map an unknown environment in real time and position virtual objects within it accordingly. Hence, markerless AR can render an AR scene realistically in almost any real-world environment. Pokémon Go is probably the best-known example.

Based on this understanding, if a specific AR scene is designed to be triggered by a specific book, marker-based AR seems to be the more appropriate option at first glance: the association between a book and an AR scene should be unique and needs to be predefined.

Unfortunately, adopting this solution for a library collection is a daunting task. From a technical point of view, marker-based AR can only accept 2-D objects as markers, such as 2-D images and 2-D barcodes. This is because a 2-D object, like a QR code, contains rich geometric patterns that provide the information about the real world that an AR display requires: position, scale, and rotation. Based on the information recovered from a 2-D marker, an AR application can construct a believable AR scene. In contrast, the ISBN barcode is a 1-D barcode that encodes information in parallel lines rather than geometric patterns, so it cannot provide all the information required to build an AR scene [8]. If marker-based AR were adopted for this project, not only would a unique AR scene have to be developed for every book (or group of books) in a collection, but an additional AR marker would also have to be attached to every book to associate it with its AR scene. This can quickly turn into a time-consuming, labor-intensive and unsustainable project.

The ideal solution, on the other hand, works quite differently. A web-based barcode reader scans the ISBN barcode on a book, and the returned ISBN, instead of data from a 2-D marker, triggers a specific web-based AR scene. This ideal solution has three components: a web-based barcode reader, attractive web-based AR content for every book, and a connection between the ISBN returned by the barcode reader and the target AR content.

A Web-based Barcode Reader

The web-based barcode reader used in both prototypes is QuaggaJS [9], a barcode reader written entirely in JavaScript that can be embedded in a web page. It supports real-time scanning of various barcode types, including the EAN barcode (ISBN barcode). Any browser that supports webcam video streaming works with QuaggaJS; Internet Explorer (IE) is the major exception [10].
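Because QuaggaJS relies on live camera streaming, a page could check for that capability before starting the scanner and show a fallback message otherwise. The sketch below is an illustration, not part of the prototypes: it assumes the standard `navigator.mediaDevices.getUserMedia` API, and the helper name `canStreamCamera` is hypothetical. It takes the navigator object as a parameter so the check can be tried outside a browser.

```javascript
// Hypothetical helper: returns true when the browser exposes the standard
// camera-streaming API that a live barcode scanner needs.
function canStreamCamera(nav) {
  return Boolean(
    nav &&
      nav.mediaDevices &&
      typeof nav.mediaDevices.getUserMedia === "function"
  );
}

// In a browser page, one might call it before starting the scanner:
// if (!canStreamCamera(navigator)) {
//   alert("Camera streaming is not supported in this browser.");
// }
```

Browsers without this API, such as Internet Explorer, would take the fallback branch instead of showing an empty camera view.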

There are several ways to implement QuaggaJS. The most straightforward one is to download the whole package from the GitHub repository [9]. A good starting point for building the prototypes is the example page in the package, live_w_locator.html, which detects various barcodes in a live camera stream. This HTML file already references QuaggaJS at the end of the <body> tag, and it also references a customized JavaScript file, live_w_locator.js, that uses the standard QuaggaJS functions.

By modifying the decoder element in the init method of live_w_locator.js, the EAN barcode can be made the default barcode type to detect:

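```javascript
// Only the EAN reader is enabled, so EAN-13 (ISBN) barcodes are the default target
decoder: { readers: [{ format: "ean_reader", config: {} }] },
```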
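For reference, a complete configuration object in the same spirit might look like the sketch below. The option names (`inputStream`, `locator`, `numOfWorkers`, `decoder.readers`, `locate`) come from the QuaggaJS documentation, but the particular values and the `#interactive` target selector are assumptions rather than the author's settings.

```javascript
// A sketch of a QuaggaJS configuration restricted to EAN-13 (ISBN) barcodes.
// The "#interactive" selector is an assumption about where the video preview lives.
var quaggaConfig = {
  inputStream: {
    name: "Live",
    type: "LiveStream",
    target: "#interactive", // element that will hold the camera preview
    constraints: {
      facingMode: "environment" // prefer the rear camera on phones and tablets
    }
  },
  locator: {
    patchSize: "medium",
    halfSample: true
  },
  numOfWorkers: 2,
  decoder: {
    readers: ["ean_reader"] // EAN-13, the symbology printed on books as the ISBN barcode
  },
  locate: true
};

// In the browser, with quagga.js loaded, the scanner would then be started with:
// Quagga.init(quaggaConfig, function (err) {
//   if (err) { console.error(err); return; }
//   Quagga.start();
// });
```

Limiting `readers` to a single format also keeps the decoder from spending time on symbologies that never appear on book covers.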

The web interface can be simplified to keep only the webcam stream view element in live_w_locator.html. The cleaned-up live_w_locator.html looks similar to this:

```html
<!doctype html>
```

The author also changed the JavaScript and CSS to adjust how the video stream is displayed. To verify the returned ISBN, the Quagga.onDetected(callback) method in live_w_locator.js was modified at this stage to log the ISBN to the console:

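```javascript
var code = result.codeResult.code; // inside Quagga.onDetected(function (result) { ... })
console.log(code);
```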
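Live scanning calls the detection callback for each processed frame in which a barcode is decoded, so the same book can be reported many times and an occasional frame can be misread. One common safeguard, sketched here as an assumption rather than part of the prototypes, is to accept a code only after it has been read several times in a row. The factory name `createReadConfirmer` and the threshold of three reads are hypothetical.

```javascript
// Hypothetical helper: accepts a barcode only after `required` identical
// consecutive reads, and reports each confirmed code only once.
function createReadConfirmer(required) {
  var lastCode = null;
  var streak = 0;
  var confirmedCode = null;

  return function (code) {
    if (code === lastCode) {
      streak += 1;
    } else {
      lastCode = code;
      streak = 1;
    }
    if (streak >= required && code !== confirmedCode) {
      confirmedCode = code;
      return true; // first time this code reaches the threshold
    }
    return false;
  };
}

// Possible use inside the detection callback (browser only):
// var confirmRead = createReadConfirmer(3);
// Quagga.onDetected(function (result) {
//   var code = result.codeResult.code;
//   if (confirmRead(code)) { console.log(code); }
// });
```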

A working web-based barcode scanner is now in place: it scans EAN barcodes and returns the ISBN.
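Since any EAN-13 barcode can be decoded this way, a simple guard can confirm that the value really is an ISBN before it is used for a query. The hypothetical helper below, a sketch not taken from the prototypes, checks the Bookland prefix (978 or 979) and the EAN-13 check digit, which weights the first twelve digits alternately by 1 and 3.

```javascript
// Hypothetical helper: checks that a decoded EAN-13 string is a plausible ISBN-13.
function isIsbn13(code) {
  // Exactly 13 digits, nothing else
  if (!/^\d{13}$/.test(code)) {
    return false;
  }
  // Books use the "Bookland" prefixes 978 and 979
  var prefix = code.slice(0, 3);
  if (prefix !== "978" && prefix !== "979") {
    return false;
  }
  // Weighted sum of the first 12 digits: weights 1, 3, 1, 3, ...
  var sum = 0;
  for (var i = 0; i < 12; i++) {
    var digit = Number(code[i]);
    sum += i % 2 === 0 ? digit : digit * 3;
  }
  // The check digit brings the total up to a multiple of 10
  var check = (10 - (sum % 10)) % 10;
  return check === Number(code[12]);
}
```

A code that fails this test can simply be ignored while scanning continues.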

Use a Data Pool as Connector

The next step is to use the obtained ISBN number as a unique identifier to query for relevant AR scenes. The author tried to identify the invisible data that is worth displaying through AR for a library collection.

Web-based AR content usually serves two purposes: to display additional data and information, and/or to create an aesthetic and entertaining effect. For the prototypes, therefore, AR content can provide additional information about a book, create a visual effect, or accomplish both.

The physical book already contains bibliographic information, including but not limited to title, author, publishing information, cover image, number of pages and the content of the book itself. However, it does not contain any real-time social-media-like information. For instance, the physical book usually does not contain the real-time rating information.

On the other hand, the social cataloging website Goodreads has accumulated a considerable amount of social-media-like data about books. What’s more, Goodreads provides an API for developers to access part of the data, including the average rating and the count of ratings for many books. If this data is displayed in AR for books in a collection, it might be of interest to a reader. Alternatively, if a library’s catalog already provides an API to access similar data, it is recommended to use the library’s API instead of the Goodreads API in order to retrieve higher-quality, more community-centered information for the library’s specific collection.

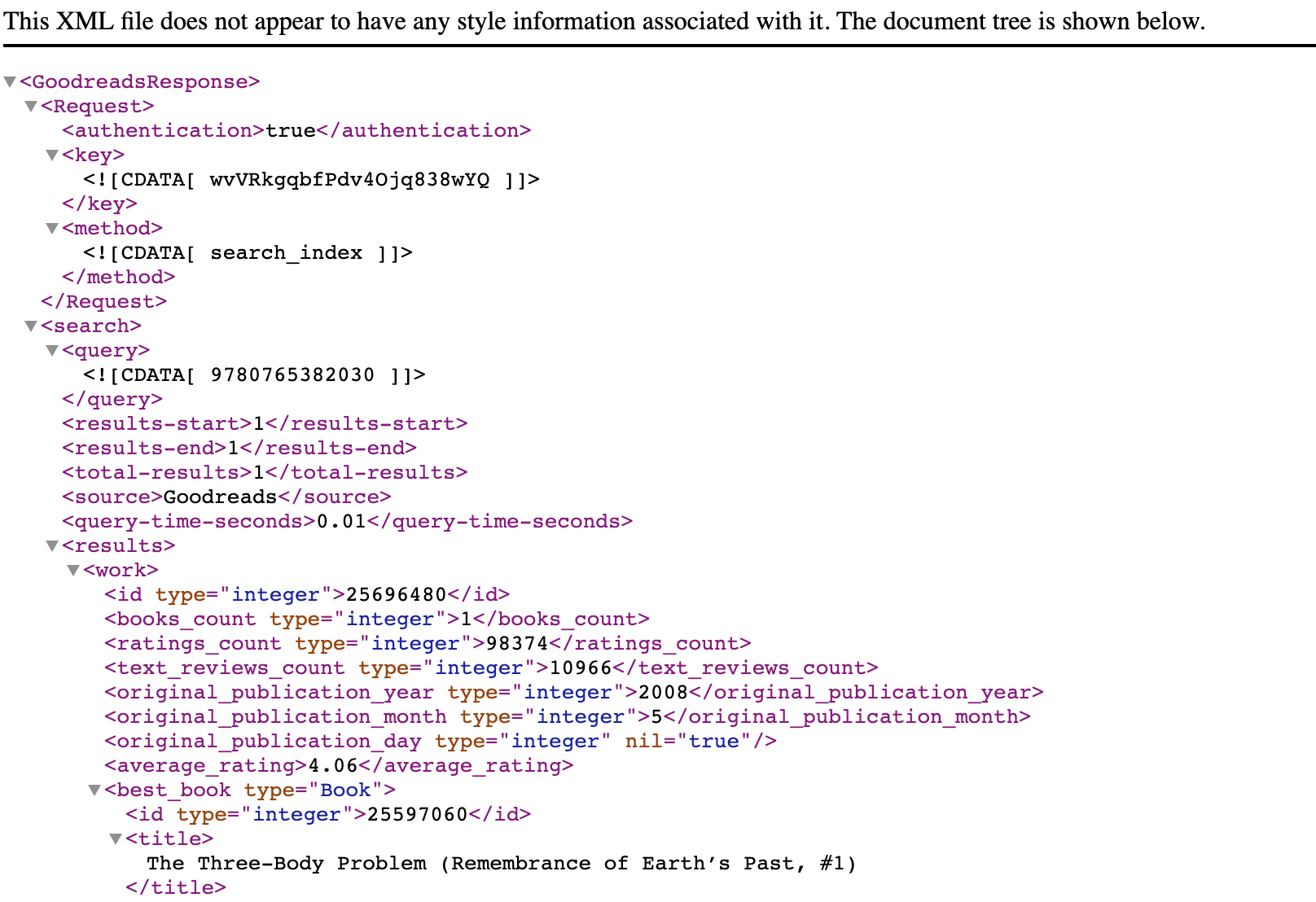

The author registered a developer key to start using the Goodreads API [11] for the prototypes. The search.books API searches all books by title, author and ISBN. The matched results are returned in XML format (Fig. 1).

Fig. 1 A snapshot of the typical XML result returned from the Goodreads search.books API

The Goodreads API request is built and fired on the fly when the web-based barcode reader detects an ISBN number. The search.books API URL is constructed using the following format:

```
https://www.goodreads.com/search?format=xml&key=[DEVELOPER KEY]&q=[QUERY]
```

[DEVELOPER KEY] is replaced with the real developer key registered at Goodreads, and [QUERY] is replaced with a real query, which is an ISBN number in this case.
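In JavaScript, the standard `URL` and `URLSearchParams` classes can assemble this request URL and percent-encode the values, which matters once a query contains spaces or punctuation (for example a title instead of an ISBN). The function name `buildGoodreadsSearchUrl` is hypothetical, and the sketch simply mirrors the URL format given above.

```javascript
// Hypothetical helper: builds the search URL in the format described above.
// searchParams.set() percent-encodes each value.
function buildGoodreadsSearchUrl(developerKey, query) {
  var url = new URL("https://www.goodreads.com/search");
  url.searchParams.set("format", "xml");
  url.searchParams.set("key", developerKey);
  url.searchParams.set("q", query);
  return url.toString();
}
```

For an ISBN query, the result is identical to filling in the template by hand; the encoding only changes the output when the query contains reserved characters or spaces.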

jQuery AJAX [12] is used to handle the API request. AJAX stands for Asynchronous JavaScript and XML; it allows a web page to be updated without reloading the whole page.

Below is some sample code to fetch and parse the result from the Goodreads API using AJAX.

```javascript
$.ajax({
```
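Whatever library performs the request, the values read from the XML arrive as strings, and a search can also return no match at all. The sketch below assumes the fields have already been read from the first `<work>` element of the response; the element names `average_rating` and `ratings_count` follow the Goodreads search.books XML format but should be treated as assumptions, and the function `summarizeRating` is hypothetical. It converts the strings to numbers and returns `null` when no usable rating exists, a case the second prototype displays as an unknown rating.

```javascript
// Hypothetical helper: turns text values read from a Goodreads <work> element
// into numbers. Returns null when the search found nothing or the book has no ratings.
function summarizeRating(fields) {
  if (!fields) {
    return null; // no <work> element in the search results
  }
  var average = parseFloat(fields.average_rating);
  // Strip thousands separators defensively before parsing the count
  var count = parseInt(String(fields.ratings_count).replace(/,/g, ""), 10);
  if (!isFinite(average) || !isFinite(count) || count === 0) {
    return null;
  }
  return {
    title: fields.title || "",
    author: fields.author || "",
    averageRating: average,
    ratingCount: count
  };
}
```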

The parsed Goodreads API result is passed to the AR web page in the URL query string while the browser is redirected to that page:

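```javascript
var params = new URLSearchParams({ isbn: code, title: title, author: author, average_rating: average_rating, rating_count: rating_count }); // encodes each value
window.location.href = "models.html?" + params.toString(); // "?" starts the query string
```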
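On the receiving side, the AR page has to read these values back out of its URL. A minimal sketch, assuming the parameter names used in the redirect (`isbn`, `title`, `author`, `average_rating`, `rating_count`); the helper `readBookParams` is hypothetical. `URLSearchParams` decodes percent-escapes and `+` signs, and the numeric fields are converted back to numbers.

```javascript
// Hypothetical helper for the AR page: reads the values passed in the query string
// (window.location.search in the browser) and converts the numeric ones back to numbers.
function readBookParams(search) {
  var params = new URLSearchParams(search); // a leading "?" is ignored
  var average = parseFloat(params.get("average_rating"));
  var count = parseInt(params.get("rating_count"), 10);
  return {
    isbn: params.get("isbn"),
    title: params.get("title"),
    author: params.get("author"),
    averageRating: isNaN(average) ? null : average,
    ratingCount: isNaN(count) ? null : count
  };
}

// In models.html: var book = readBookParams(window.location.search);
```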

Generate On-the-fly AR Content

The next step is to create the AR web page. With the rating data returned from the Goodreads API, AR scenes can be generated on the fly by scripting in A-Frame [13] and AR.js [14]. A-Frame is an open-source web framework for building virtual reality experiences that work on Vive, Rift, desktop and mobile platforms. AR.js is a standards-based web AR solution that works on any device with WebGL [15], WebRTC [16], and a camera.

In the head of the AR HTML file, references to both the AR.js and the A-Frame JavaScript libraries were added:

```html
<head>
```

In the body part of the same HTML file, an AR scene with the ability to use the webcam was created:

```html
<a-scene embedded arjs renderer="precision: mediump">
</a-scene>
```

A-Frame offers flexible ways to control a scene and its entities through JavaScript and DOM [17] APIs. Hence, new entities can be added to the AR scene on the fly as soon as the parsed data from the Goodreads API arrives. For example, the average rating can be converted into a row of 3-D spheres, similar to the 2-D star ratings on plain web pages. Below is the JavaScript code to add a row of complete spheres on the fly:

```javascript
var i;
```
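One way to implement this idea is sketched below, assuming A-Frame's `<a-sphere>` primitive and its `position`, `radius` and `color` attributes. The layout function `ratingSpheres`, the spacing, sphere size and color, and the smaller sphere for the fractional part of the rating are all hypothetical choices that go beyond the row of complete spheres described above; only `addRatingSpheres` touches the DOM, so it runs only in a browser.

```javascript
// Hypothetical helper: one entry per rating point, laid out in a centred row.
// Full points become full-size spheres; a fractional remainder of at least 0.05
// (an arbitrary cut-off) becomes a proportionally smaller sphere.
function ratingSpheres(averageRating, spacing) {
  var full = Math.floor(averageRating);
  var remainder = averageRating - full;
  var scales = [];
  for (var i = 0; i < full; i++) {
    scales.push(1);
  }
  if (remainder >= 0.05) {
    scales.push(remainder);
  }
  var start = -((scales.length - 1) * spacing) / 2; // centre the row on x = 0
  return scales.map(function (scale, index) {
    return { x: start + index * spacing, scale: scale };
  });
}

// Browser-only (hypothetical): appends the spheres to an A-Frame scene element.
function addRatingSpheres(sceneEl, averageRating) {
  ratingSpheres(averageRating, 0.6).forEach(function (s) {
    var sphere = document.createElement("a-sphere");
    sphere.setAttribute("position", { x: s.x, y: 0.5, z: -3 });
    sphere.setAttribute("radius", 0.25 * s.scale);
    sphere.setAttribute("color", "#FFC107");
    sceneEl.appendChild(sphere);
  });
}

// e.g. addRatingSpheres(document.querySelector("a-scene"), 4.3);
```

Keeping the layout arithmetic separate from the DOM calls makes it easy to adjust spacing or sizes without touching the scene code.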

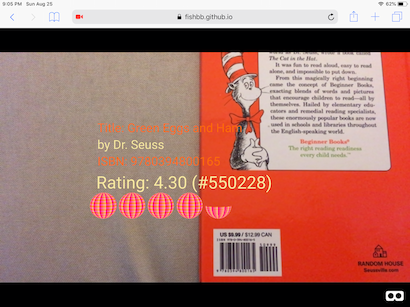

With this, the first prototype of AR enhancement for a library collection is completed. All three components are in place – a web page that scans the ISBN barcode, the Goodreads API that fetches relevant data by the returned ISBN number, and an AR scene created with the returned Goodreads metadata (Fig. 2).

Fig. 2 Display AR rating information by scanning the ISBN barcode of the book Green Eggs and Ham in Safari browser on an iPad. The book has 550,228 ratings and an average rating of 4.30. AR content generated on the fly with A-Frame and AR.js.

One Major Drawback and An Alternative Method

There is one major drawback of the first prototype, though. When a user tilts the mobile device, the virtual objects do not change accordingly. This is because AR.js supports only marker-based AR at the time of writing; in other words, SLAM is not yet integrated into AR.js. The first prototype fakes the effect of markerless AR with the marker-based AR.js, but AR content displayed this way lacks a marker as a reference point for tracking changes in the real world.

The alternative method is to use real markerless AR technology. For example, 8th Wall [18], a company that received the Auggie Award for Best Developer Tool at Augmented World Expo 2019 [19], provides tools for making markerless web-based AR that works on every mobile device. The author registered for the free basic tier of 8th Wall [20]. There are three different workspaces to choose from in the 8th Wall console; the author created an AR Camera workspace, which is the most appropriate approach for the second prototype. A Web AR Camera in 8th Wall is a web page that contains one predefined AR object of your choice in an AR scene. This convenience comes at a cost: since the page is predefined, Goodreads data cannot be embedded into the AR scene on the fly.

That being said, AR Camera can be used to create interactive and impressive AR experiences. The free basic tier allows up to 10 AR Cameras and up to 1,000 views per month across all AR Cameras. This is not ideal, but it is sufficient for the second prototype.

Making an AR Camera in 8th Wall is fairly straightforward. In the author’s experience, the most difficult part lies in converting 3D objects into the .GLB format, the only format 8th Wall accepts. The author downloaded 3D models from Free3D [21] and converted them to .GLB files using a combination of tools, such as Blender [22], Unity [23] and an online 3D file converter [24]. One useful feature of AR Camera is that it supports two interaction modes for an uploaded 3D model: “hold to drag” or “tap to move”. A developer can choose the mode that suits the use case.

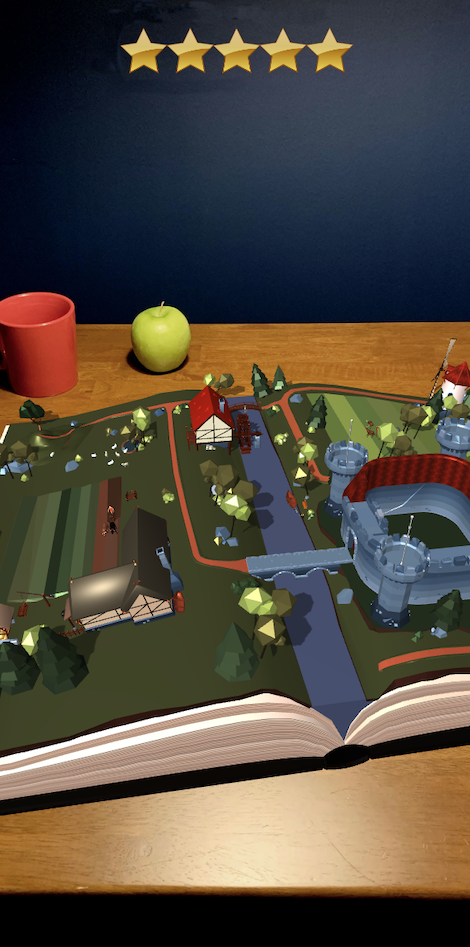

Six AR Cameras were created in this way, covering a rating scale from 1 to 5 plus an unknown rating level for books that have no data available in Goodreads. For instance, an AR scene for a book with a 5-star rating shows five stars on the top and a 3D medieval magic book model underneath (Fig. 3). In contrast, the unknown rating level shows a question mark on the top and a 3D UFO model (Fig. 4).

Fig. 3 The design of an AR scene for a book with an average rating of four stars.

Fig. 4 The design of an AR scene for a book with unknown average rating.

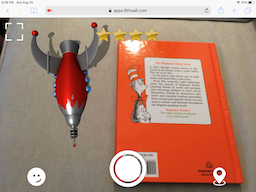

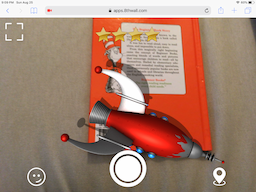

A mechanism similar to the one in the first prototype was adopted to retrieve AR content. The average rating returned from the Goodreads API is used to select the matching 8th Wall AR Camera page, followed by a redirect to that page. The AR models are interactive: a user can tap on the screen to make them move around (Fig. 5 and Fig. 6).

Fig. 5 Display a rating of four stars and a 3D rocket model by scanning the ISBN barcode of the book Green Eggs and Ham in Safari browser on an iPad. AR content generated with 8th Wall AR Camera.

Fig. 6 A user can tap on the screen to make the 3D rocket in Fig. 5 fly around.
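The page-selection step in the second prototype can be sketched as a small lookup. This is an assumption about how the matching could work, not the author's code: the hypothetical helper `pickArCameraPage` rounds the average rating to the nearest whole star (so 4.30 maps to four stars, as in Fig. 5), and the six URLs are placeholders standing in for real 8th Wall AR Camera links.

```javascript
// Placeholder URLs (hypothetical): one AR Camera page per rating level plus "unknown".
var AR_CAMERA_PAGES = {
  "1": "https://example.com/ar-camera/rating-1",
  "2": "https://example.com/ar-camera/rating-2",
  "3": "https://example.com/ar-camera/rating-3",
  "4": "https://example.com/ar-camera/rating-4",
  "5": "https://example.com/ar-camera/rating-5",
  unknown: "https://example.com/ar-camera/unknown"
};

// Hypothetical helper: maps an average rating to one of the six AR Camera pages.
// Missing, non-numeric or zero ratings fall back to the "unknown" page.
function pickArCameraPage(averageRating, pages) {
  if (typeof averageRating !== "number" || !isFinite(averageRating) || averageRating <= 0) {
    return pages.unknown;
  }
  // Round to the nearest whole star and keep the result within 1..5
  var level = Math.min(5, Math.max(1, Math.round(averageRating)));
  return pages[String(level)];
}
```

The returned URL would then be assigned to `window.location.href`, as in the first prototype's redirect.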

Conclusion

With the advancement of web-based AR technologies and the promise of faster Internet speeds, the prototypes discussed in this article point to a promising future in which physical library collections become more interactive and attractive by blurring the line between reality and virtuality.

The two prototypes are published on the Internet via GitHub Pages [25]. Anyone interested can bookmark the web pages and start testing with different books. The first URL [26], which uses AR.js, can be tested on almost any modern device, such as laptops, desktop computers, smartphones and tablets. The second URL [27], which integrates 8th Wall AR Cameras, lets one experience the AR content on mobile devices until the cap of 1,000 views per month is reached.

[1] DFREE (2018). Keeping Up With… Augmented Reality. [online] Association of College & Research Libraries (ACRL). Available at: http://www.ala.org/acrl/publications/keeping_up_with/ar [Accessed 28 Aug. 2019].

[2] PBS NewsHour. (2019). Virtual reality allows neurosurgery patients to ‘tour’ their own brains. [online] Available at: https://www.pbs.org/newshour/show/virtual-reality-allows-neurosurgery-patients-to-tour-their-own-brains [Accessed 28 Aug. 2019].

[3] Young Entrepreneur Council (2019). Building AR/VR Solutions That Appeal to Consumers: Three Tips For Entrepreneurs. Forbes. [online] 10 Jul. Available at: https://www.forbes.com/sites/theyec/2019/07/10/building-arvr-solutions-that-appeal-to-consumers-three-tips-for-entrepreneurs/#1dee30a465e1 [Accessed 28 Aug. 2019].

[4] Grey, E. (2018). Sweden trials augmented reality in the country’s busiest train station. [online] Railway Technology. Available at: https://www.railway-technology.com/features/sweden-ar-station-navigation/ [Accessed 28 Aug. 2019].

[5] Lou, D. (2019). Create Efficient, Platform-neutral, Web-Based Augmented Reality Content in the Library. Code4Lib Journal, [online] (45). Available at: https://journal.code4lib.org/articles/14632 [Accessed 28 Aug. 2019].

[6] Trekk.com. (2017). Marker and Markerless Augmented Reality. [online] Available at: https://www.trekk.com/insights/marker-and-markerless-augmented-reality [Accessed 28 Aug. 2019].

[7] Wikipedia Contributors (2019). Simultaneous localization and mapping. [online] Wikipedia. Available at: https://en.wikipedia.org/wiki/Simultaneous_localization_and_mapping [Accessed 28 Aug. 2019].

[8] jetmarkingadmin (2018). The Difference Between 1D and 2D Barcodes. [online] Jet Marking. Available at: https://jet-marking.com/difference-1d-2d-barcodes/ [Accessed 28 Aug. 2019].

[9] Github.io. (2017). QuaggaJS, an advanced barcode-reader written in JavaScript. [online] Available at: https://serratus.github.io/quaggaJS/ [Accessed 28 Aug. 2019].

[10] Caniuse.com. (2019). Can I use… Support tables for HTML5, CSS3, etc. [online] Available at: https://caniuse.com/#feat=stream [Accessed 28 Aug. 2019].

[11] Goodreads.com. (2015). API. [online] Available at: https://www.goodreads.com/api/index [Accessed 28 Aug. 2019].

[12] JS Foundation - js.foundation (2014). jQuery.ajax() | jQuery API Documentation. [online] Jquery.com. Available at: https://api.jquery.com/jquery.ajax/ [Accessed 28 Aug. 2019].

[13] A-Frame. (2019). A-Frame – Make WebVR. [online] Available at: https://aframe.io/ [Accessed 28 Aug. 2019].

[14] jeromeetienne (2019). jeromeetienne/AR.js. [online] GitHub. Available at: https://github.com/jeromeetienne/AR.js [Accessed 28 Aug. 2019].

[15] WebGL - OpenGL ES for the Web (2011). WebGL - OpenGL ES for the Web. [online] The Khronos Group. Available at: https://www.khronos.org/webgl/ [Accessed 28 Aug. 2019].

[16] Webrtc.org. (2017). WebRTC Home | WebRTC. [online] Available at: https://webrtc.org/ [Accessed 28 Aug. 2019].

[17] MDN Web Docs. (2019). Introduction to the DOM. [online] Available at: https://developer.mozilla.org/en-US/docs/Web/API/Document_Object_Model/Introduction [Accessed 28 Aug. 2019].

[18] 8thwall.com. (2018). 8th Wall | Augmented Reality. [online] Available at: https://www.8thwall.com/ [Accessed 28 Aug. 2019].

[19] Awexr.com. (2019). Announcing the AWE 2019 Tenth Annual Auggie Award Winners by AWE Team. [online] Available at: https://www.awexr.com/blog/91-announcing-the-awe-2019-tenth-annual-auggie-award- [Accessed 28 Aug. 2019].

[20] 8thwall.com. (2019). 8th Wall Console. [online] Available at: https://console.8thwall.com/sign-up?type=camera [Accessed 28 Aug. 2019].

[21] Free3d.com. (2018). 3D Models for Free - Free3D.com. [online] Available at: https://free3d.com/ [Accessed 28 Aug. 2019].

[22] Blender Foundation (2019). blender.org - Home of the Blender project - Free and Open 3D Creation Software. [online] blender.org. Available at: https://www.blender.org/ [Accessed 28 Aug. 2019].

[23] Unity Technologies (2019). Unity - Unity. [online] Unity. Available at: https://unity.com/ [Accessed 28 Aug. 2019].

[24] Creators3d.com. (2019). Creators 3D - Jobs for Professional Artists. [online] Available at: https://www.creators3d.com/online-viewer [Accessed 28 Aug. 2019].

[25] Fishbb 冒泡的小鱼儿. (2019). AR for Books. [online] Available at: https://fishbb.github.io/article/isbn.html [Accessed 28 Aug. 2019].

[26] Fishbb 冒泡的小鱼儿. (2019). Static AR for Books by Dan Lou. [online] Available at: https://fishbb.github.io/ar/isbn.html [Accessed 28 Aug. 2019].

[27] Fishbb 冒泡的小鱼儿. (2019). SLAM AR for Books by Dan Lou. [online] Available at: https://fishbb.github.io/ar/isbn8.html [Accessed 28 Aug. 2019].

Author Accepted Manuscript (AAM) Deposit License

Emerald allows authors to deposit their AAM under the Creative Commons Attribution Non-commercial International Licence 4.0 (CC BY-NC 4.0). To do this, the deposit must clearly state that the AAM is deposited under this licence and that any reuse is allowed in accordance with the terms outlined by the licence. To reuse the AAM for commercial purposes, permission should be sought by contacting permissions@emeraldinsight.com.

For the sake of clarity, commercial usage would be considered as, but not limited to:

o Copying or downloading AAMs for further distribution for a fee;

o Any use of the AAM in conjunction with advertising;

o Any use of the AAM for promotional purposes by for-profit organisations;

o Any use that would confer monetary reward, commercial gain or commercial exploitation.

Emerald appreciates that some authors may not wish to use the CC BY-NC licence; in this case, you should deposit the AAM and include the copyright line of the published article. Should you have any questions about our licensing policies, please contact permissions@emeraldinsight.com.